An interview with Ardy Arianpour: Building the future of health data

People ask me what gets me out of bed every morning. I have this big vision about finding ways to use data to improve the health of everyone on the planet. Yes, that’s right, all 7.7 billion of us.

My mission on a daily basis is to find projects that help me on the path towards making that vision a reality. I’m always on the lookout for people who are also dreaming about making an impact on the whole world. I bumped into one such person recently, when I was attending the Future of Individualised Medicine conference in the USA. That person is Ardy Arianpour, CEO and co-founder of a startup called Seqster that I believe could make a significant contribution to making my vision a reality over the long term. I interviewed Ardy to hear more about his story and the amazing possibilities with health data that he dreams of bringing to our lives.

1. What is Seqster?

Products such as mint.com, which enable people to bring all their personal finance data into one place, have helped so many people manage their finances. We believe that Seqster is the mint.com of your health. We are a person-centric interoperability platform that seamlessly brings together all your medical records (EHR), baseline genetic (DNA), continuous monitoring and wearable data in one place. From a business standpoint we’re a SaaS platform like “The Salesforce.com for healthcare”. We provide a turnkey solution for any payer, provider or clinical research entity since “Everyone is seeking health data”. We empower people to collect, own and share their health data on their terms.

2. So Seqster is another attempt at a personal health record (PHR) like Microsoft’s failed attempt with HealthVault?

Microsoft’s HealthVault and Google Health were great ideas, but their timing was wrong. The connectivity wasn’t there and neither was the utility. In a way, it’s also the problem with Apple Health Records. Seqster transcends those PHRs for three reasons:

a. First, we’ve built the person-centric interoperability platform that can retrieve chain of custody data from any digital source. Unlike every other PHR, we’re not just dealing with self-reported data, which can be inaccurate and cumbersome. By putting the person at the center of healthcare, we give them the tools to disrupt their own data silos and bring in not only longitudinal data but also multi-dimensional and multi-generational data.

b. Second, our data is dynamic. Everything is updated in real time to reflect your current health. One site, one login. You never have to sign in twice.

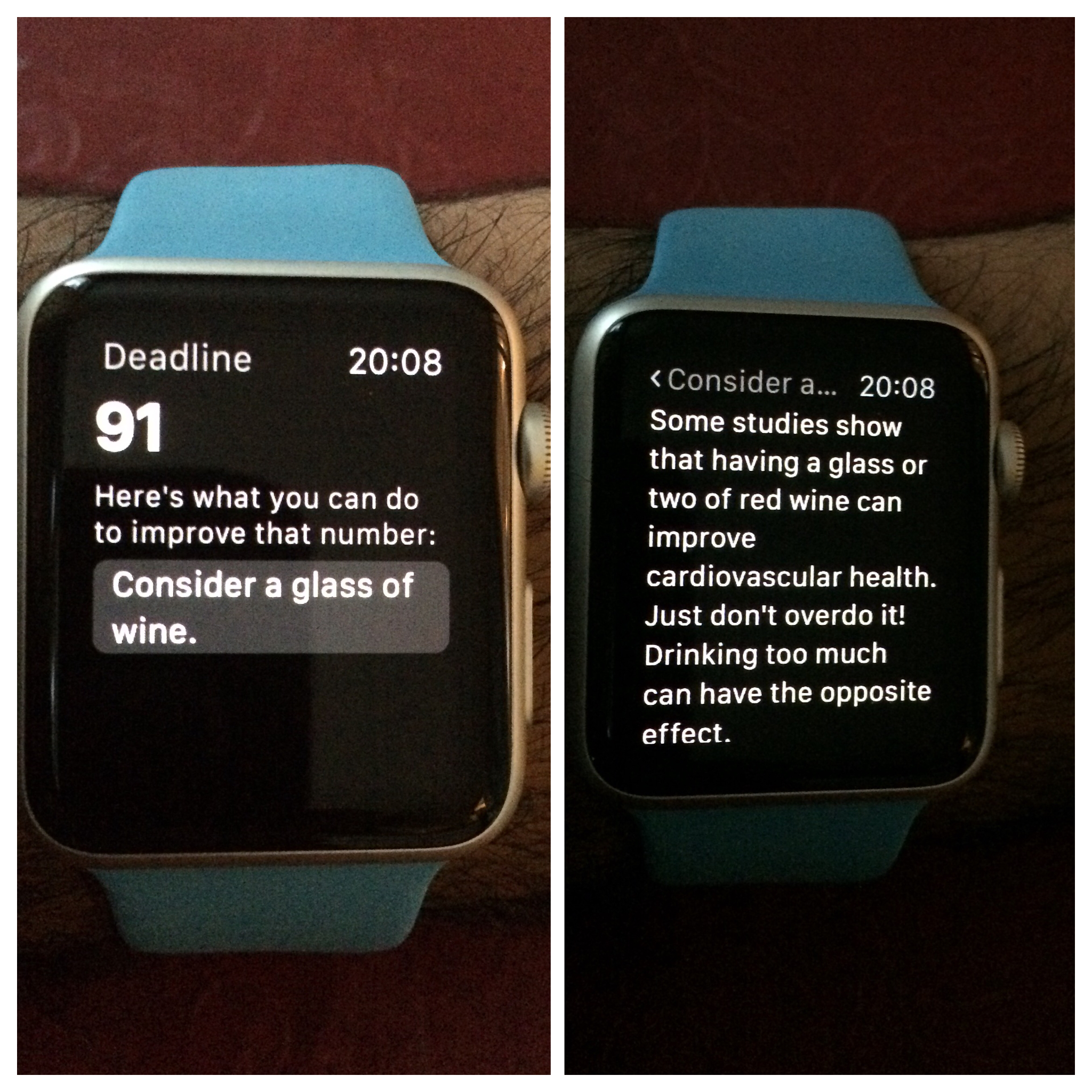

c. Third, we generate new insights, which is tough to do unless you have high quality data coming directly from multiple sources. For example, we have integrated the American Heart Association’s Life Simple Seven to give you dynamic insights into your heart health plus actionable recommendations based on their guidelines.

3. Why do you believe Seqster will succeed when so many others (often with big budgets) have failed?

The first reason that we will succeed is our team. We have achieved previous successes in implementing clinical and consumer genetic testing at nationwide scale. In the genetics market we’ve been working on data standardization and sharing for the last decade so we approached this challenge from a completely different vantage point. We didn’t set out to solve interoperability, but did it completely by accident.

Next, we have achieved nationwide access in the USA, with over 3000 hospitals integrated as well as over 45,000 small doctor offices and medical clinics. In the past few years we have surpassed 100M patient records, 30M+ direct to consumer DNA / genetic tests and 100M+ wearables. We also provide invaluable utility by giving people a legal framework to share their health data with their family members, caregivers, physicians, or even with clinical trials if they want.

All we are doing is shedding light on what we call “Dark Data”: the data that already exists on all of us and has been hidden up until now.

3. Your background has been primarily in Genomics, where you’ve done sterling work in driving BRCA genetic testing across the United States. Is Seqster of interest mainly to those who have had some kind of genetic test?

Not at all. Seqster is for healthcare consumers. We’re all healthcare consumers in some way. Having said that, as you may have noted, the “Seq” in Seqster comes from our background in genome sequencing. We originally had the idea that we could create a place for the over 30M individuals who had done some kind of genetic test to take ownership of their data and to incentivize people who have not yet had a genetic test to get sequenced. However, we realized that genetic data without high quality, high fidelity clinical health data is useless. The highest quality data is the data that comes directly from your doctor’s office or hospital. This, combined with your sequence data and your fitness data, is a powerful tool for better health for everyone.

4. Wherever I travel in the world, from Brazil to the USA to Australia, the same challenge about health data comes up in conversations. The challenge of getting different computer systems in healthcare to share patient data with each other, otherwise known more formally as “interoperability” – can Seqster really help to solve this challenge or is this a pipe dream?

It was a dream for us as well until we cracked the code on person-centric interoperability. What is amazing is that we can bring our technology anywhere in the world right now, as long as the data exists. Imagine people everywhere, and how we could change healthcare and health outcomes overnight if they had access to their health data from any device, Android, Apple or web-based. Imagine that your kids and grandkids have a full health history that they can take to their next doctor visit. How powerful can that be? That is Seqster. We help you seek out your health data, no matter where you are or where your data resides.

5. So what was the moment in your life that compelled you to start Seqster?

In 2011 I was at a barbeque with a bunch of physicians and they asked what I did for a living. I told them about my own DNA testing experience and background in genomics. Quickly the conversation turned to how we could make DNA data actionable and relevant to both themselves and their patients. The next day I went for a run and couldn’t stop thinking about that conversation and how owning all my data in one place would make it meaningful for me. I came home and was watching the movie “The Italian Job” and heard the word Napster in the film. Being a sequencing guy and seeking out info, I immediately thought of “Seqster”, typed it into godaddy.com and bought Seqster.com for $9.99. The tailwinds were not there to do anything with it until January of 2016, when I decided to put a team together to start building the future of health data.

6. What has been the biggest barrier in your journey at Seqster so far, and have you been able to overcome it?

Have you seen the movie Bohemian Rhapsody? We’re like the band Queen – we’re misfits and underdogs. No one believes that we solved this small $30 billion problem called interoperability until they try Seqster for themselves. The real barrier right now is getting Seqster into the right hands. As people start to catch on to the fact that Seqster solves some of their biggest pain points, we will overcome the technology adoption barrier. I am so excited about the new possibilities that are emerging for us to contribute to advancing the way health data gets collated, shared and used. Stay tuned, we have exciting news to share over the next few months.

7. What has the reaction to Seqster been? Who are the most sceptical, and who seem to be the biggest advocates?

We have a funny story to share here. About three years ago when we started Seqster, we told Dr. Eric Topol from Scripps Research what we wanted to do and he told us that he didn’t believe that we could do it. Three years later after hearing some of the buzz he asked to meet with us and try Seqster for himself. His tweet the next day after trying Seqster says it all. We couldn’t be prouder.

1st time I've been able to get my medical data from 1985 -> present

4 health systems @ScrippsHealth @UCSDHealth @ClevelandClinic @umichmedicine + @23andMe + @fitbit +@MyFitnessPal with labs from diff't systems all connected @seqster (trying it <24 hrs)

step in the right direction pic.twitter.com/25W0cDqKOr

Eric Topol (@EricTopol), August 21, 2018

8. Lots of startups are developing digital health products but few are designing with patients as partners. Tell us more about how you involve patients in the design of your services?

Absolutely! We couldn’t agree more. I believe that many digital health companies fail because they don’t start with the patient in mind. From day one Seqster has been about empowering people to collect, own, and share their data on their terms. Our design is unique because we spent time with thousands of patients, caregivers and physicians to develop a person-centric interface that is simple and intuitive.

9. The future of healthcare is seen as a world where patients have much more control over their health, and in managing their health. What role could Seqster play in making that future a reality?

We had several chronically ill patients use Seqster to manage their health and gather all their medical records from multiple health systems within minutes. Some feedback was as simple as having one site and one login so that they can immediately access their entire medical record from a single platform. A number of patients told us that they found lab results that had values outside of normal range which their doctors never told them about. When we heard this, we felt like we were on the verge of bringing aspects of precision medicine to the masses. It definitely resonated very well with our vision of the future of healthcare being driven by the patient.

10. Fast forward 20 years to 2039, what would you want the legacy of Seqster to be in terms of impact on the world?

In 20 years, Seqster will be known as the technology that changed healthcare by putting all your health data in one place. Our technology will improve care by delivering accurate medical records instantaneously upon request by any provider anywhere. All the data barriers will be removed. Everyone will have access to their health information no matter where they are or where their data is stored. Your health data will follow you wherever you go.

[Disclosure: I have no commercial ties to any of the individuals or organizations mentioned in this post]