An interview with Jo Aggarwal: Building a safe chatbot for mental health

We can now converse with machines in the form of chatbots. Some of you might have used a chatbot when visiting a company’s website. They have even entered the world of healthcare. I note that the pharmaceutical company, Lupin, has rolled out Anya, India’s first chatbot for disease awareness, in this case, for Diabetes. Even for mental health, chatbots have recently been developed, such as Woebot, Wysa and Youper. It’s an interesting concept and given the unmet need around the world, these could be an additional tool that might help make a difference in someone’s life. However, there was a recent BBC article highlighting how two of the most well known chatbots (Woebot and Wysa) don’t always perform well when children use the service. I’ve performed my own real world testing of these chatbots in the past, and gotten to know the people who have created these products. So after the BBC article got published, Jo Aggarwal, CEO and co-founder of Touchkin, the company that has made Wysa, got back in touch with me to discuss trust and safety when using chatbots. It was such an insightful conversation, I offered to interview her for this blog post as I think the story of how a chatbot for mental health is developed, deployed and maintained is a complex and fascinating journey.

1. How safe is Wysa, from your perspective?

Given all the attention this topic typically receives, and its own importance to us, I think it is really important to understand first what we mean by safety. For us, Wysa being safe means having comfort around three questions. First, is it doing what it is designed to do, well enough, for the audience it’s been designed for? Second, how have users been involved in Wysa’s design and how are their interests safeguarded? And third, how do we identify and handle ‘edge cases’ where Wysa might need to serve a user - even if it’s not meant to be used as such?

Let’s start with the first question. Wysa is an interactive journal, focused on emotional wellbeing, that lets people talk about their mood, and talk through their worries or negative thoughts. It has been designed and tested for a 13+ audience, where for instance, it asks users to take parental consent as a part of its terms and conditions for users under 18. It cannot, and should not, be used for crisis support, or by users who are children - those who are less than 12 years old. This distinction is important, because it directs product design in terms of the choice of content as well as the kind of things Wysa would listen for. For its intended audience and expected use in self-help, Wysa provides an interactive experience that is far superior to current alternatives: worksheets, writing in journals, or reading educational material. We’re also gradually building an evidence base here on how well it works, through independent research.

The answer to the second question needs a bit more description of how Wysa is actually built. Here, we follow a user-centred design process that is underpinned by a strong, recognised clinical safety standard.

When we launched Wysa, it was for a 13+ audience, and we tested it with an adolescent user group as a co-design effort. For each new pathway and every model added in Wysa, we continue to test the safety against a defined risk matrix developed as a part of our clinical safety process. This is aligned to the DCB 0129 and DCB 0160 standards of clinical safety, which are recommended for use by NHS Digital.

As a result of this process, we developed some pretty stringent safety-related design and testing steps during product design:

At the time of writing a Wysa conversation or tool concept, the first script is reviewed by a clinician to identify safety issues, specifically - any times when this could be contra-indicated, or be a trigger, and alternative pathways for such conditions.

When a development version of a new Wysa conversation is produced, the clinicians review it again, specifically for adherence to clinical process and for potential safety issues as per our risk matrix.

Each aspect of the risk matrix has test cases. For instance, if the risk is that using Wysa may increase the risk of self harm in a person, we run two test cases - one where a person is intending self harm but it has not been detected as such (normal statements) and one where self-harm statements detected from the past are run through the Wysa conversation, at every Wysa node or ‘question id’. This is typically done on a training set of a few thousand user statements. A team then tags the response for appropriateness. A 90% appropriateness level is considered adequate for the next step of review.

The inappropriate statements (typically less than 10%) are then reviewed for safety, where the question asked is - will this inappropriate statement increase the risk of the user indulging in harmful behavior? If there is even one such case, the Wysa conversation pathway is redesigned to prevent this and the process is repeated.

The output of this process is shared with a psychologist and any contentious issues are escalated to our Clinical Safety Officer.
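To make the testing steps above concrete, here is a minimal sketch in Python. It is an illustration only, not Wysa's actual code: the names `TaggedResponse`, `review_pathway` and the returned labels are hypothetical, and what happens when a pathway scores below 90% is an assumption, since the interview does not describe that branch.

```python
from dataclasses import dataclass

APPROPRIATENESS_THRESHOLD = 0.90  # the 90% level mentioned in the interview


@dataclass
class TaggedResponse:
    """One test statement run through one conversation node, tagged by a reviewer."""
    question_id: str               # the node ('question id') the statement was run against
    statement: str                 # user statement from the test set
    appropriate: bool              # reviewer's tag for the bot's response
    increases_risk: bool = False   # set during safety review of inappropriate responses


def review_pathway(results: list[TaggedResponse]) -> str:
    """Return 'below_threshold', 'redesign' or 'share_with_psychologist'."""
    if not results:
        raise ValueError("no tagged responses to review")

    # Gate 1: share of responses tagged appropriate must reach the threshold.
    rate = sum(r.appropriate for r in results) / len(results)
    if rate < APPROPRIATENESS_THRESHOLD:
        return "below_threshold"  # assumption: the source does not say what happens here

    # Gate 2: even one inappropriate response that increases risk forces a redesign.
    if any(r.increases_risk for r in results if not r.appropriate):
        return "redesign"

    # Passed both gates: output goes on to the psychologist for review.
    return "share_with_psychologist"
```

The point of the second gate is that it is not statistical: a pathway can score well above 90% and still be sent back if a single response is judged to increase risk.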

Equally important for safety, of course, is the third question. How do we handle ‘out of scope’ user input, for example, if the user talks about suicidal thoughts, self-harm, or abuse? What can we do if Wysa isn’t able to catch this well enough?

To deal with this question, we did a lot of work to extend the scope of Wysa so that it does listen for self-harm and suicidal thoughts, as well as abuse in general. On recognising this kind of input, Wysa gives an empathetic response, clarifies that it is a bot and unable to deal with such serious situations, and signposts to external helplines. It’s important to note that this is not Wysa’s core purpose - and it will probably never be able to detect 100% of crisis situations - neither can Siri or Google Assistant or any other Artificial Intelligence (AI) solution. That doesn’t make these solutions unsafe for their expected use. But even here, our clinical safety standard means that even if the technology fails, we need to ensure it does not cause harm - or in our case, increase the risk of harmful behavior. Hence, all Wysa’s statements and content modules are tested against safety cases to ensure that they do not increase risk of harmful behavior even if the AI fails.

We watch this very closely, and add content or listening models where we feel coverage is not enough and Wysa needs to extend. This was the case specifically with the BBC article: we will now relax our stand that we will never take personally identifiable data from users, explicitly listen (and check) for age, and direct users under 12 out of Wysa towards specialist services.

So how safe is Wysa? It is safe within its expected use, and the design process follows a defined safety standard to minimize risk on an ongoing basis. In case more serious issues are identified, Wysa directs users to more appropriate services - and makes sure at the very least it does not increase the risk of harmful behaviour.

2. In plain English, what can Wysa do today and what can’t it do?

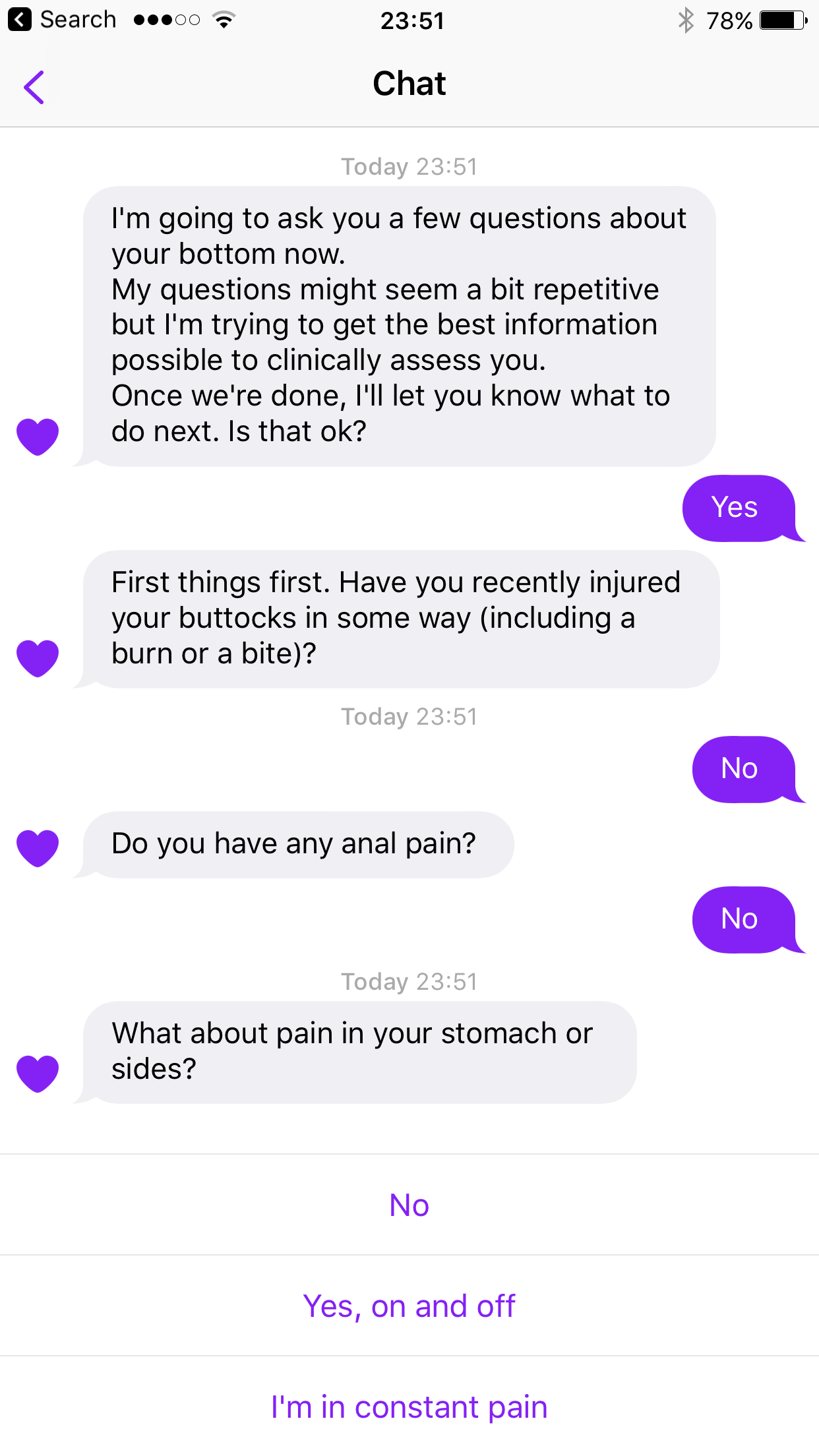

Wysa is a journal married to a self-help workbook, with a conversational interface. It is a more user friendly version of a worksheet - asking mostly the same questions with added models to provide different paths if, for instance, a person is anxious about exams or grieving for a dog that died.

It is an easy way to learn and practice self-help techniques - to vent and observe your thoughts, practice gratitude or mindfulness, learn to accept your emotions as valid and find the positive intent in the most negative thoughts.

Wysa doesn’t always understand context - it definitely will not pass the Turing test for ‘appearing to be completely human’. That is definitely not its intended purpose, and we’re careful in telling users that they’re talking to a bot (or as they often tell us, a penguin).

Secondly, Wysa is definitely not intended for crisis support. A small percentage of people do talk to Wysa about self harm or suicidal thoughts; they are given an empathetic response and directed to helplines.

Beyond self harm, detecting sexual and physical abuse statements is a hard AI problem - there are no models globally that do this well. For instance ‘My boyfriend hurts me’ may be emotional, physical, or sexual. Also, most abuse statements that people share with Wysa tend to be in the past: ‘I was abused when I was 12’ needs a very different response from ‘I was abused and I am 12’. Our response here is currently to appreciate the courage it takes to share something like this, ask a user if they are in crisis, and if yes, say that as a bot Wysa is not suited for a crisis and offer a list of helplines.
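The two example sentences above contain the same words and the same number, and differ only in tense. A small Python sketch shows why a plain keyword match on "12" is not enough. This is an illustration under stated assumptions, not how Wysa works: the pattern and the function name `stated_current_age` are hypothetical, and a regular expression like this is far too crude for real use (it would also match "I am 12 minutes late").

```python
import re
from typing import Optional

# Present-tense self-reports only, e.g. "I am 12" or "I'm 12 years old".
# Hypothetical pattern for illustration; a real system would need a trained model.
_PRESENT_AGE = re.compile(r"\bI\s*(?:am|['’]m)\s+(\d{1,2})\b", re.IGNORECASE)


def stated_current_age(text: str) -> Optional[int]:
    """Return the age a user states in the present tense, or None if there is none."""
    match = _PRESENT_AGE.search(text)
    return int(match.group(1)) if match else None


# Same words, different tense, different meaning:
past = stated_current_age("I was abused when I was 12")     # no present-tense age
present = stated_current_age("I was abused and I am 12")    # user is 12 now
```

Here `past` is `None` because the only age mentioned is in the past tense, while `present` is `12`. Even this toy case needs grammar, not just vocabulary, which hints at why the general problem is hard.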

3. Has Wysa been developed specifically for children? How have children been involved in the development of the product?

No, Wysa hasn’t been developed specifically for children.

However, as I mentioned earlier, we have co-designed with a range of users, including adolescents.

4. What exactly have you done when you’ve designed Wysa with users?

For us, the biggest risk was that someone’s data may be leaked and therefore cause them harm. To deal with this, we took the hard decision of not taking any personally identifiable data at all from users, because of which they also started trusting Wysa. This meant that we had to compromise on certain parts of the product design, but we felt it was a tradeoff well worth making.

After launch, for the first few months, Wysa was an invite-only app, where a number of these features were tested first from a safety perspective. For example, SOS detection and pathways to helplines were a part of the first release of Wysa, which our clinical team saw as a prerequisite for launch.

Since then, design continues to be led by users. For the first million conversations, Wysa stayed a beta product, as we didn’t have enough of a response base to test new pathways. There is no one ‘launch’ of Wysa - it is continuously being developed and improved based on what people talk to it about. For instance, the initial version of Wysa did not handle abuse (physical or sexual) at all, as it was not expected that people would talk to it about these things. When they began to, we created pathways to deal with these in consultation with experts.

An example of a co-design initiative with adolescents was a study with Safe Lab at Columbia University to understand how at-risk youth would interact with Wysa and the different nuances of language used by these youth.

4. Can a user of Wysa really trust it in a crisis? What happens when Wysa makes a mistake and doesn’t provide an appropriate response?

People should not use Wysa in a crisis - it is not intended for this purpose. We keep reinforcing this message across various channels: on the website, the app descriptions on Google Play or the iTunes App Store, even responses to user reviews or on Twitter.

However, anyone who receives information about a crisis has a responsibility to do the most that they can to signpost the user to those who can help. Most of the time, Wysa will do this appropriately - we measure how well each month, and keep working to improve this. The important thing is that Wysa should not make things worse even when it misdetects, so users should not be unsafe, i.e. we should not increase the risk of harmful behaviour.

One of the things we are adding based on suggestions from clinicians is a direct SOS button to helplines so users have another path when they recognise they are in crisis, so the dependency on Wysa to recognise a crisis in conversation is lower. This is being co-designed with adolescents and clinicians to ensure that it is visible, but so that the presence of such a button does not act as a trigger.

For inappropriate responses, we constantly improve, and when a user shares that Wysa’s response was wrong, Wysa responds in a way that places the onus entirely on itself. If a user objects to a path Wysa is taking, saying this is not helpful or this is making me feel worse, Wysa immediately changes the path, emphasises that the mistake is Wysa’s and not the user’s, and explains that it is a bot that is still learning. We closely track where and when this happens, and any responses that meet our criteria for a safety hazard are immediately raised to our clinical safety process, which includes review with children’s mental health professionals.

We constantly strive to improve our detection, and are also starting to collaborate with other people dealing with similar issues and create a common pool of resources.

5. I understand that Wysa uses AI. I also note that there are so many discussions around the world relating to trust (or lack of it) in products and services that use AI. A user wants to trust a product, and if it’s health related, then trust becomes even more critical. What have you done as a company to ensure that Wysa (and the AI behind the scenes) can be trusted?

You’re so right that there are so many discussions about AI, how this data is used, and how it can be misused. We explicitly tell users that their chats stay private (not just anonymous) and will never be shared with third parties. In line with GDPR, we also give users the right to ask for their data to be deleted.

After downloading, there is no sign-in. We don’t collect any personally identifiable data about the user: you just give yourself a nickname and start chatting with Wysa. The first conversation reinforces this message, and this really helps in building trust as well as engagement.

AI of the generative variety will not be ready for products like Wysa for a long time - perhaps never. They have in the past turned racist or worse. The use of AI in applications like Wysa is limited to detection and classification of user free text, not generating ‘advice’. So the AI here is auditable, testable, quantifiable - not something that may suddenly learn to go rogue. We feel that trust is based on honesty, so we do our best to be honest about the technical limitations of Wysa.
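The difference between classifying text and generating it can be sketched in a few lines of Python. This is a hedged illustration, not Wysa's implementation: the labels, the response wording and the keyword-based `keyword_classifier` (a stand-in for a trained model) are all hypothetical.

```python
from typing import Callable, Dict

# Hypothetical fixed set of pre-written, clinician-reviewed scripts, keyed by label.
REVIEWED_RESPONSES: Dict[str, str] = {
    "crisis": "That sounds really hard. I am a bot and not suited for a crisis. "
              "Here is a list of helplines.",
    "exam_anxiety": "Exams can feel overwhelming. Shall we talk through what worries you?",
    "fallback": "Thank you for sharing. Can you tell me more?",
}


def keyword_classifier(text: str) -> str:
    """Stand-in for a trained model: maps free text to exactly one label."""
    lowered = text.lower()
    if "suicid" in lowered or "hurt myself" in lowered:
        return "crisis"
    if "exam" in lowered:
        return "exam_anxiety"
    return "fallback"


def respond(text: str, classify: Callable[[str], str] = keyword_classifier) -> str:
    """Pick a reviewed script; the model never writes the reply itself."""
    label = classify(text)
    # Unknown labels fall back to a safe default rather than improvised text.
    return REVIEWED_RESPONSES.get(label, REVIEWED_RESPONSES["fallback"])
```

Whatever the user types, the reply is always one of the entries in `REVIEWED_RESPONSES`. The worst a classifier error can do is select the wrong reviewed script, which is what makes every possible output auditable and testable in advance.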

Every Wysa response and question goes through a clinical safety process, and is designed and reviewed by a clinical psychologist. For example, we place links to journal articles in each tool and technique that we share with the user.

6. What could you and your peers who make products like this do to foster greater trust in these products?

As a field, the use of conversational AI agents in mental health is very new, and growing fast. There is great concern around privacy, so anonymity and security of data is key.

After that, it is important to conduct rigorous independent trials of the product and share data openly. A peer-reviewed mixed-method study of Wysa’s efficacy has recently been published in JMIR for this reason, and we are working with universities to develop these trials further. It’s important that advancements in this field are science-driven.

Lastly, we need to be very transparent about the limitations of these products - clear on what they can and cannot do. These products are not a replacement for professional mental health support - they are more of a gym, where people learn and practice proven, effective techniques to cope with distress.

7. What could regulators do to foster an environment where we as users feel reassured that these chatbots are going to work as we expect them to?

Leading from your question above, there is a big opportunity to come together and share standards, tools, models and resources.

For example, if a user enters a search term around suicide in Google, or posts about self-harm on Instagram, maybe we can have a common library of Natural Language Processing (NLP) models to recognise and provide an appropriate response?

Going further, maybe we can provide this as an open-source resource to anyone building a chatbot that children might use? Could this be a public project, funded and sponsored by government agencies, or a regulator?

In addition, there are several other roles a regulator could play. They could fund research that proves efficacy, defines standards and outlines the proof required (the NICE guidelines recently released are a great example), or even a regulatory sandbox where technology providers, health institutions and public agencies come together and experiment before coming to a view.

8. Your website mentions that “Wysa is... your 4 am friend, For when you have no one to talk to..” – Shouldn’t we be working in society to provide more human support for people who have no one to talk to? Surely, everyone would prefer to deal with a human than a bot? Is there really a need for something like Wysa?

We believed the same to be true. Wysa was not born of a hypothesis that a bot could help - it was an accidental discovery.

We started our work in mental health to simply detect depression through AI and connect people to therapy. We did a trial in semi-rural India, and were able to use the way a person’s phone moved about to detect depression with 90% accuracy. To get the sensor data from the phone, we needed an app, which we built as a simple mood-logging chatbot.

Three months in, we checked on the progress of the 30 people we had detected with moderate to severe depression and whose doctor had prescribed therapy. It turned out that only one of them took therapy. The rest were okay with being prescribed antidepressants but, for different reasons ranging from access to stigma, did not take therapy. All of them, however, continued to use the chatbot, and months later reported feeling better.

This was the genesis of Wysa - we didn’t want to be the reason for a spurt in anti-depressant sales, so we bid farewell to the cool AI tech we were doing, and began to realise that it didn’t matter if people were clinically depressed - everyone has stressors, and we all need to develop our mental health skills.

Wysa has had 40 million conversations with about 800,000 people so far - growing entirely through word of mouth. We have understood some things about human support along the way.

For users ready to talk to another person about their inner experience, there is nothing as useful as a compassionate ear, the ability to share without being judged. Human interactions, however, seem fraught with opinions and judgements. When we struggle emotionally, it affects our self image - for some people, it is easier to talk to an anonymous AI interface, which is kind of an extension of ourselves, than another person. For example, this study found that US Veterans were three times as likely to reveal their PTSD to a bot as to a human. But still, human support is key - so we run weekly Ask Me Anything (AMA) sessions with a mental health professional on the top topics that Wysa users propose. We had a recent AMA where over 500 teenagers shared their concerns about sharing their mental health issues or sexuality with their parents. Even within Wysa, we encourage users to create a support system outside.

Still, the most frequent user story for Wysa is someone troubled with worries or negative thoughts at 4 am, unable to sleep, not wanting to wake someone up, scrolling social media compulsively and feeling worse. People share how they now talk to Wysa to break the negative cycle and use the sleep meditations to drift off. That is why we call it your 4 am friend.

9. Do you think there is enough room in the market for multiple chatbots in mental health?

I think there is a need for multiple conversational interfaces, different styles and content. We have only scratched the surface, only just begun. Some of these issues that we are grappling with today are like the issues people used to grapple with in the early days of ecommerce - each company solving for ‘hygiene factors’ and safety through their own algorithms. I think over time many of the AI models will become standardised, and bots will work for different use cases - from building emotional resilience skills, to targeted support for substance abuse.

10. How do you see the future of support for mental health, in terms of technology, not just products like Wysa, but generally, what might the future look like in 2030?

The first thing that comes to mind is that we will need to turn the tide on the damage caused by technology to mental health. I think there will be a backlash against addictive technologies. I am seeing the tech giants becoming conscious of the mental health impact of making their products addictive, and facing the pressure to change.

I hope that by 2030, safeguarding mental health will become a part of the design ethos of a product, much as accessibility and privacy have become in the last 15 years. By 2030, human computer interfaces will look very different, and voice and language barriers will be fewer.

Whenever there is a trend, there is also a counter trend. So while technologies will play a central role in creating large scale early mental health support - especially crossing stigma, language and literacy barriers in countries like India and China - we will also see social prescribing gain ground. Walks in the park or art circles will become prescriptions for better mental health, and people will have to be prescribed non-tech activities because so much of people’s lives are on their devices.

[Disclosure: I have no commercial ties to any of the individuals or organizations mentioned in this post]